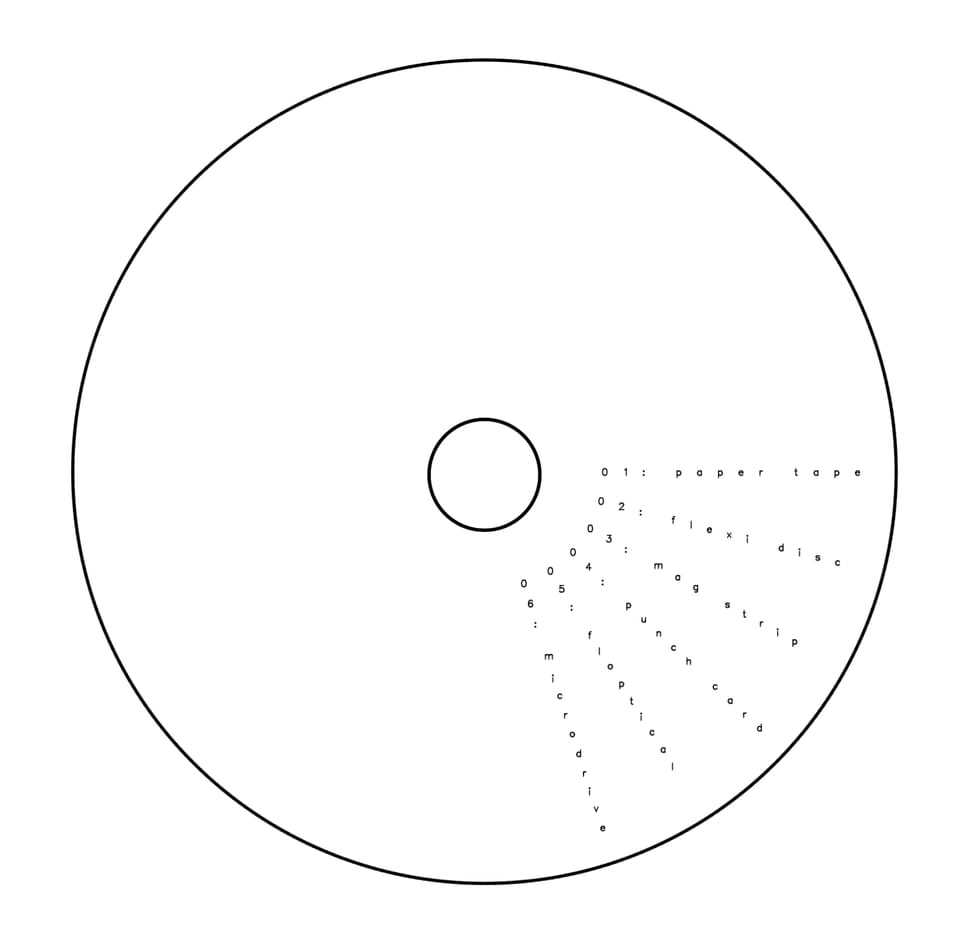

ADVENT #6 — zx microdrive

In the last installment, I said that all iteration in Alice was done by the Y combinator. This is not quite true. At least one macro in the standard library, ‘do’, completely unrolls loops when the iteration count is static. Here’s some output from the system profiler after a few interactive loops on the same tape.

$ (do 1 (add 1 2))

initial registers: {"pc":3896,"fp":4201,"r1":0,"r2":0,"threads":0,"deferred":1}

program tape start: 0

program tape end: 4265

actual tape end: 4705

$ (do 10 (add 1 2))

initial registers: {"pc":8823,"fp":9140,"r1":0,"r2":0,"threads":0,"deferred":4706}

program tape start: 4705

program tape end: 9206

actual tape end: 9813

$ (do 100 (add 1 2))

initial registers: {"pc":16271,"fp":16588,"r1":0,"r2":0,"threads":0,"deferred":9814}

program tape start: 9813

program tape end: 16654

actual tape end: 19031

$ (do 1000 (add 1 2))

initial registers: {"pc":48889,"fp":49206,"r1":0,"r2":0,"threads":0,"deferred":19032}

program tape start: 19031

program tape end: 49272

actual tape end: 59162

The compiler generates everything from program tape start to program tape end. The difference between program tape end and actual tape end is made up of all of the feeds done by the program and all of the deferred cells created by the runtime while scheduling.

In the first case, the size of the tape is dominated by the compiled standard library. Together, the library and the useless single iteration of (add 1 2) use 4705 tape cells. Follow that through to the thousand-iteration case and, there, around 30 thousand cells go into the tape for the program and around 10 thousand cells are generated at runtime.
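The split between compiled and runtime cells can be checked directly against the four profiler runs. This short Python sketch copies the tape positions from the output above (nothing else is assumed) and computes, for each run, the cells emitted by the compiler and the cells appended at runtime, plus how many extra compiled cells each extra iteration costs. The helper names `split_cells` and `marginal_compiled` are illustrative only.

```python
from typing import List, Tuple

# Tape positions copied from the four profiler runs:
# (iterations, program tape start, program tape end, actual tape end)
RUNS: List[Tuple[int, int, int, int]] = [
    (1, 0, 4265, 4705),
    (10, 4705, 9206, 9813),
    (100, 9813, 16654, 19031),
    (1000, 19031, 49272, 59162),
]


def split_cells(start: int, program_end: int, actual_end: int) -> Tuple[int, int]:
    """Split one run's tape usage into (compiled, runtime) cell counts.

    compiled: cells emitted by the compiler (program tape start to program tape end)
    runtime:  feeds and deferred cells appended while running (program end to actual end)
    """
    return program_end - start, actual_end - program_end


def marginal_compiled(runs: List[Tuple[int, int, int, int]]) -> List[float]:
    """Extra compiled cells per extra iteration between consecutive runs."""
    out = []
    for (n0, s0, p0, _), (n1, s1, p1, _) in zip(runs, runs[1:]):
        out.append(((p1 - s1) - (p0 - s0)) / (n1 - n0))
    return out


if __name__ == "__main__":
    for n, start, prog_end, actual_end in RUNS:
        compiled, runtime = split_cells(start, prog_end, actual_end)
        print(f"do {n:>4}: compiled={compiled:>6} runtime={runtime:>5}")
    print("compiled cells per extra iteration:", marginal_compiled(RUNS))
```

For the 1000-iteration run this gives 30241 compiled cells and 9890 runtime cells. The marginal figure comes out to exactly 26 compiled cells per extra iteration for both the 10-to-100 and 100-to-1000 steps, which is consistent with each unrolled (add 1 2) adding a fixed chunk of program tape, though four data points are thin evidence.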

Is this staggeringly inefficient? Yes. Do I really care? Not at the moment. Each iteration is inefficient because I can’t be bothered to improve it, but I think there is performance lying on the floor to be claimed. Overall, unrolling the loop is reasonable. One purpose of the system is to produce a record of the execution as a byproduct of the execution, and for that record to have been used in the execution, not simply emitted as a dead artifact. If you’re doing that anyway, then unroll with gusto. Most other instruction trace systems are merely lying. They lack fidelity in the first place, or they throw it away to manage the size of the traces, or they are so complex to process that it would have been faster to instrument the execution directly and do the intended program analysis or debugging or whatever there.
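To make the static-unrolling rule for ‘do’ concrete, here is a minimal Python model of it. It is not Alice’s implementation: s-expressions are modeled as nested Python lists, and `expand_do` and the `begin`, `loop`, and `lambda` forms it emits are hypothetical names chosen for illustration. When the count is a literal integer, the body is repeated that many times; otherwise the expansion falls back to a runtime loop, standing in for Y-combinator iteration.

```python
from typing import Any, List

# Hypothetical model: an s-expression is a str symbol, an int, or a list of s-expressions.
SExpr = Any


def expand_do(form: List[SExpr]) -> List[SExpr]:
    """Expand a (do count body) form, assuming the semantics described in the post.

    A literal integer count is fully unrolled into a (begin ...) form that
    repeats the body once per iteration. Any other count falls back to a
    hypothetical runtime (loop ...) form, standing in for Y-combinator iteration.
    """
    head, count, body = form
    if head != "do":
        raise ValueError(f"not a do form: {form!r}")
    if isinstance(count, int):
        # Static size: repeat the body once per iteration, no runtime loop at all.
        return ["begin"] + [body] * count
    # Dynamic size: the iteration count is only known at runtime.
    return ["loop", count, ["lambda", [], body]]


if __name__ == "__main__":
    print(expand_do(["do", 3, ["add", 1, 2]]))
    print(expand_do(["do", "n", ["add", 1, 2]]))
```

Expanding `["do", 3, ["add", 1, 2]]` yields a `begin` holding three copies of `["add", 1, 2]`, so in this model the emitted program grows linearly with a static count, which is the same shape of growth the profiler runs show in program tape.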

Speaking of loops, today’s removable media is the Sinclair ZX Microdrive for the ZX Spectrum. This system was essentially a miniature version of the 8-track audio tape system. The 8-track had a head that could be mechanically moved to four positions to pick up pairs of tracks containing left and right stereo channels. The tape cartridge was arranged internally as an endless loop on a spool. A single pair of channels could play for around 20 minutes, and the player would automatically switch to the next pair at the end. Four trips through the tape, one with the head in each of the four positions, got around 80 minutes of audio.

The Sinclair Microdrive kept the endless loop but used only one track. That track ran at double the speed of the 8-track system but only had enough media for about 8 seconds per trip around the loop. In this way it was somewhat like a floppy disk with a single large track. The total data capacity of the Microdrive was about 85 kilobytes, just over half that of a contemporary Commodore single-sided, double-density floppy disk. Many floppies of that era ran at 300 RPM, meaning that the revisit time on a sector as the disk rotated was 200 milliseconds. The media moved under the head dramatically faster in the floppy case, but actual floppy data transfer rates were much more modest in practice, limited mostly by the external I/O interfaces of early home computers.
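The 200-millisecond figure follows from the rotation rate: one revolution at 300 RPM takes 60/300 seconds. The rough sketch below compares that with the Microdrive, assuming the approximate 8-second loop time given above plays the role of one "rotation". Under that assumption, the worst-case wait for a given block to come back under the head is about 40 times longer. The function and constant names are illustrative only.

```python
def revisit_time_s(rpm: float) -> float:
    """Worst-case wait, in seconds, for a sector to pass the head again: one full revolution."""
    return 60.0 / rpm


FLOPPY_RPM = 300.0       # typical floppy rotation rate cited in the post
MICRODRIVE_LOOP_S = 8.0  # assumed: approximate time for one trip around the Microdrive loop

floppy_revisit_s = revisit_time_s(FLOPPY_RPM)    # 0.2 s
microdrive_equiv_rpm = 60.0 / MICRODRIVE_LOOP_S  # the loop "rotates" 7.5 times per minute
slowdown = MICRODRIVE_LOOP_S / floppy_revisit_s  # roughly 40x longer worst-case revisit

print(f"floppy revisit: {floppy_revisit_s * 1000:.0f} ms")
print(f"microdrive equivalent: {microdrive_equiv_rpm} RPM, about {slowdown:.0f}x slower to revisit")
```

This only compares access latency; as noted above, the sustained transfer rates people actually saw were set largely by the host computer’s I/O interface rather than by the media itself.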

The problem most often cited with the Sinclair drive was that the tape stretched. The real problem was the culture of Sinclair itself. Sir Clive Sinclair was endlessly creative, so much so that I can’t capture it here. The trouble is that quality control was terrible in essentially all of his endeavors, the result of a fanatical drive to deliver exciting technology at low cost. The problem with mass production is that volume drives cost, not the other way around. Products that are produced over time in large volume become cheap almost as a side effect of being manufacturable. Products that are designed to be made from cheap components do not seem to become manufacturable in the same way. The Microdrive was a good example: conceived as cheaper than the floppy and never brought even to the cusp of reliability. Floppy volumes brought the cost down below the Microdrive so fast that it became irrelevant. Sinclair kept repeating the same mistake when it eventually did release floppy disks: floppy technology, but in completely oddball sizes.

Many American hobbyists (and the small businesses that were essentially capitalist avatars of individual hobbyists) in the Timex/Sinclair ecosystem turned to adaptors for other floppy drives that were more widely available in the US market. These adaptors were often more expensive than the underlying Sinclair system; many had more processing power and as much memory as the Sinclair itself. Why were people willing to spend more on peripherals than on the machine? Why spend more on the machine plus peripherals when other packaged computers were available for less? There are several reasons.

I’m writing this post (and running ADVENT) on an Apple iPad Pro 12.9 from 2022. The keyboard case that I use with it cost hundreds of dollars new, more than an inexpensive Chromebook and almost as much as a low-end iPad. I’m doing it for some of the same reasons that worked in the Sinclair era. Partly, I like the iPad ecosystem, especially the transparent cellular connections unavailable in most laptops. Also, I got the keyboard used for a fraction of the original price. Many peripherals in the ’80s home computer era had second or third lives as hand-me-downs or swap meet finds. These market dynamics are as much a part of the ecosystem as the relationship between hardware and software.

Building a working emulator and building a good program analysis tool are similarly hard, but they seem to attract different types of hackers. I think it’s like the incremental peripheral ecosystem for the Spectrum. Half a program analysis tool seems useful, while half an emulator seems useless. Even when they are exactly the same piece of software.
