Is all this even leg … ible?

Much of the recent mail here at the Paper Tiger offices is from concerned folks wondering whether all that’s happening is even legal. Fortunately, most of this mail is actually meant for the public-interest law firm down the street. We simply have no idea: the only judging we do here is the silent kind, and even then mostly about whether you put milk in your coffee too early in the day.

We do have opinions about one of the other cousins of Proto-Indo-European ‘leg’, namely legible. I remember a lot of attention to readable code early in my career, though what readable meant exactly was unclear. Maybe the standard was whether I would even bother to look at a piece of code that purported to do something before adding my own broken version to the general fund of human knowledge. By that standard, readable is a very personal thing. Legible is completely different: it is concerned with whether the glyphs and their order can be deciphered. Legible is why many programmers favor the slashed zero. Legible used to be a big deal when programs were drafted on code pads and punched by hand. While legible sounds like a problem that direct entry on video terminals should have solved completely sometime in the Carter administration, it actually got worse.

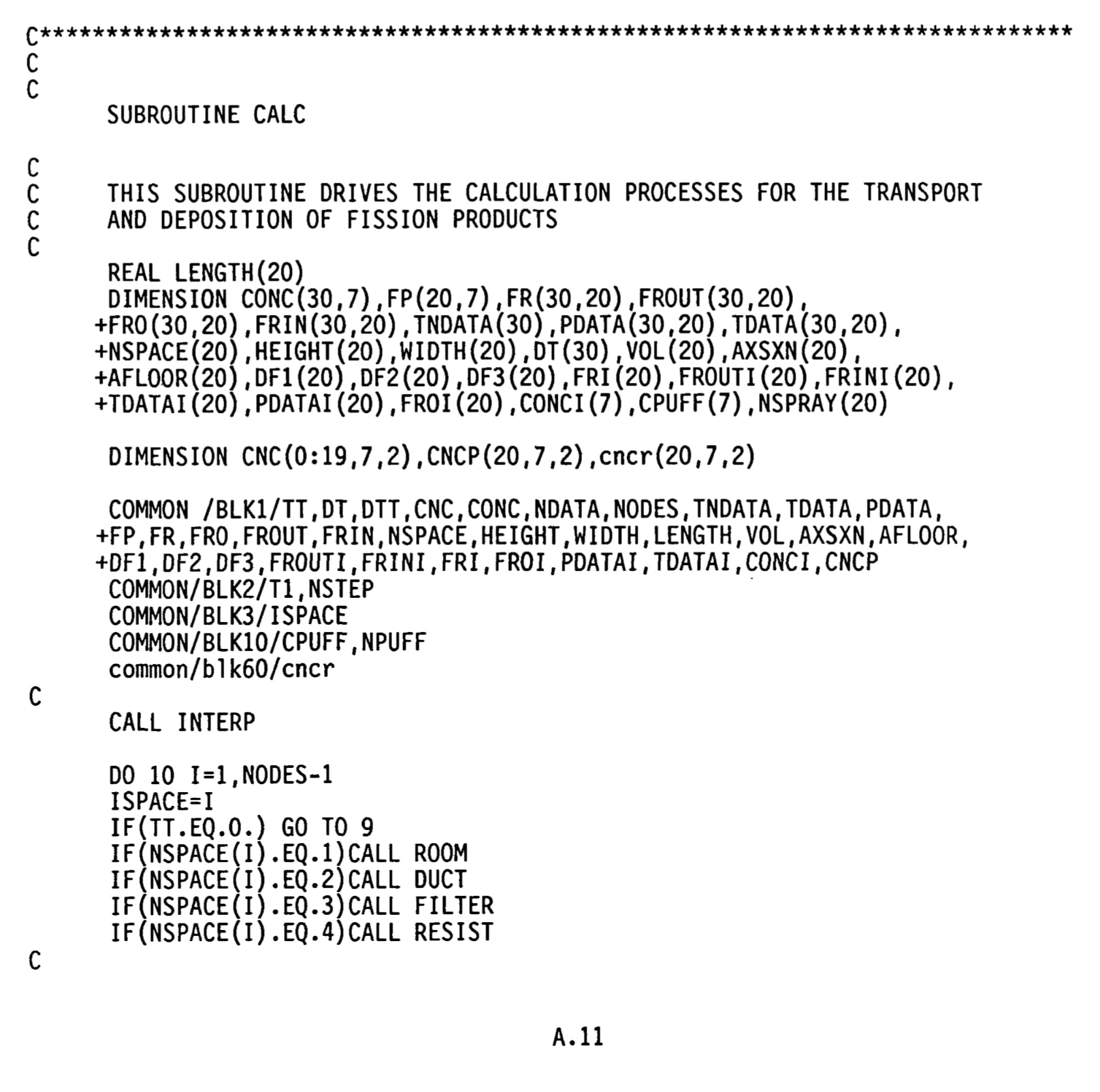

The wide adoption of portable programming languages and interoperable families of computers meant that program listings were suddenly much more useful as scholarly artifacts. Papers began sprouting appendices in FORTRAN decades before code would be easy to share online. Whereas mathematicians had spent centuries, since even before the advent of movable type, making sure their theorems and geometrical constructions could be readily interpreted and copied, FORTRAN program listings were run off on the near-near-letter-quality line printer and dumped into papers as camera-ready copy. Many of those documents have since been digitized as low-resolution bitmaps and survive only in that form.

So we’re going to model the transport and deposition of nuclear fission products? Are you sure you typed that in correctly? The listing above is, I think, a very good example of the problem.

Around the same time, legibility became a major concern in the emerging home computer market, where program listings were often published in magazines with the understanding that readers would type them in by hand. Both the Atari 8-bit magazine of record (ANTIC) and Compute! magazine printed BASIC program listings with checksum values that let their amateur readers validate a program after typing in page after page of listing.
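The idea behind those checksums is easy to sketch. What follows is a toy per-line checksum for illustration, not the algorithm either magazine actually used; `lineChecksum`, `findTypos`, and the position-weighted, one-byte sum are all assumptions.

```javascript
// Toy per-line checksum for illustration only: NOT the algorithm that ANTIC
// or Compute! actually printed. Each character code is weighted by its
// position in the line, and the total is kept to a single byte (0 to 255).
function lineChecksum(line) {
  let sum = 0;
  for (let i = 0; i < line.length; i++) {
    sum = (sum + (i + 1) * line.charCodeAt(i)) % 256;
  }
  return sum;
}

// Compare each typed line with the checksum printed beside it in the
// magazine; return the 1-based numbers of the lines that do not match.
function findTypos(typedLines, printedSums) {
  const bad = [];
  typedLines.forEach((line, i) => {
    if (lineChecksum(line) !== printedSums[i]) bad.push(i + 1);
  });
  return bad;
}
```

The position weighting is the design choice that matters: a plain sum of character codes is identical for `GOTO 10` and `GOTO 01`, so it would miss exactly the kind of transposition a tired typist makes. Keeping the result to one byte means some errors will still slip through.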

Does legibility still matter, especially in an age when Stack Overflow offers to copy code snippets directly to the clipboard? I think it does. Throughout my career I have used one computer or another that, for whatever reason, was not connected to anything like the internet. Such machines may offer some kind of runtime (Perl, Python, C, or whatever) but no support for magic dependencies or package repositories. I once had a Rohde & Schwarz logic analyzer with terrific hardware but absolutely none of the interesting software. It did have Microsoft Internet Explorer, though, because that’s how the help files worked. Explorer’s JavaScript runtime was powerful enough to demodulate locally captured IQ samples, but I had to key the demodulator into notepad.exe by hand.
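On a machine like that, the first thing worth keying in is something small that makes everything after it easier to type. A minimal sketch of such a REPL follows; it assumes Node.js rather than any of the runtimes mentioned above, and `replStep` and the `--repl` flag are hypothetical names chosen for illustration.

```javascript
// Minimal REPL sketch, assuming Node.js. Start it with the hypothetical
// --repl flag, e.g. `node repl.js --repl`; without the flag the file only
// defines replStep.
const readline = require("readline");
const util = require("util");

// Evaluate one line and return something printable. Indirect eval,
// (0, eval)(src), runs the code in the global scope, so `var` and function
// declarations persist from one line to the next (`let` and `const` do not).
function replStep(src) {
  try {
    return util.inspect((0, eval)(src));
  } catch (e) {
    return String(e); // e.g. "SyntaxError: ..." for a mistyped line
  }
}

if (process.argv.includes("--repl")) {
  const rl = readline.createInterface({
    input: process.stdin,
    output: process.stdout,
    prompt: "> ",
  });
  rl.prompt();
  rl.on("line", (line) => {
    console.log(replStep(line));
    rl.prompt();
  });
}
```

Because definitions made with `var` or `function` survive between lines, this is enough, one line at a time, to start building something better from inside it.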

One of the first little snippets I usually write is a minimal REPL powerful enough to write a better one. For a recent such exercise, I decided to finally add a better JavaScript rewriter so that I could play a particular JavaScript reindeer game that would have been trivial in a Scheme dialect with macros but has long been needlessly complicated in JavaScript’s dialect of Scheme. I’d always avoided it because I never wanted to type in a whole lexer when I could instead be doing real computering.

So what is legible, anyway? I can’t say for sure, but I know it when I see it. Can I read it without losing my place? Can I discern each glyph? Are there n-grams that are themselves intelligible enough that I can scan them quickly? I don’t think understanding the meaning or function is a big part of it. Why should it be? Even ciphertext needs to be legible.

The Soviet cipher beneath VENONA was based on messages of six five-letter code groups and a five-digit number, like:

UETWZ UREEO ZTTTU ETPEP TRART ZURAP 33217

That’s perfectly legible but imperfectly unintelligible, as would later be revealed when decrypts were made public.
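Legibility in this sense can even be checked mechanically, with no attempt at understanding. Taking the message format described above at face value (six five-letter groups followed by a five-digit number), a hypothetical `looksLikeVenonaMessage` check might look like this; it says only whether a message is well formed, never what it means.

```javascript
// Hypothetical format check, taking the description in the text at face
// value: six groups of five letters, then one five-digit number.
// It tests only legibility (well-formed groups), never meaning.
function looksLikeVenonaMessage(text) {
  const groups = text.trim().split(/\s+/);
  if (groups.length !== 7) return false;
  // [A-Z] means ASCII capitals only, so a look-alike letter from another
  // script (for example Cyrillic U+0415, which looks like "E") fails.
  const lettersOk = groups.slice(0, 6).every((g) => /^[A-Z]{5}$/.test(g));
  const numberOk = /^[0-9]{5}$/.test(groups[6]);
  return lettersOk && numberOk;
}
```

Restricting letters to ASCII A through Z also catches homoglyphs from other scripts, which are perfectly legible to a human reader and entirely different characters to a computer.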

Anyhow, here’s my own minimal (and almost certainly broken and incomplete) JavaScript lexer, written to be easy to type. It accepts a string and returns a lexed string with tokens delimited by ‘#’, where ‘|’ marks the point at which lexing stopped. Any ‘#’ and ‘|’ in the original text are replaced by HASH and PIPE. I claim only that I value the legibility of this over either performance or intelligibility.

```javascript
let lex = function(toplevel_expr_str) {

  // '|' marks the lexing cursor, so any real '|' or '#' is renamed first.
  let s = "|" + toplevel_expr_str.replace(/\|/g, "PIPE").replace(/#/g, "HASH");

  // Alternatives are tried in order; the first one that matches wins.
  let lang = [
    "(/[*]([^*]|([*]+[^*/]))*[*]+/)", // c-style comments
    "(//[^\n]*(\n|$))", // c++-style comments, including one that ends the input
    "([ \n\t]+)", // whitespace
    "(/((\\\\[/])|[^/])+/[a-zA-Z]*)", // regex; "\\\\[/]" is an escaped slash
    "(0x[0-9a-fA-F]+)", // hex numbers
    "([0-9]+([.][0-9]*){0,1})", // decimal numbers

    // multi-char assignment, equality
    "(===)|(==)|(\\+=)|(-=)|([*]=)|([/]=)|(%=)|([*][*]=)|(>>=)|(<<=)|(!==)|(!=)|(PIPE=)|(\\^=)|(&=)",

    // other multi-char operators
    "(\\+\\+)|(--)|(PIPE)|([.][.][.])|(=>)|([*][*])|(>>)|(<<)",

    // delimiters, braces, single char operators ('-' last, so it is literal)
    "[;,:[{()}\\]~!$%^&*+=.<>?/-]",

    "([_a-zA-Z][_a-zA-Z0-9]*)", // symbols
    "(\"((\\\\.)|[^\"\\\\])*\")|('((\\\\.)|[^'\\\\])*')", // strings with backslash escapes
  ];

  let langr = new RegExp(
    // everything up to the current token
    "^([^|]*)\\|" +
    // the current token
    "(" + lang.join("|") + ")"
  );

  // Each pass moves the cursor past one token and adds one '#';
  // stop when a pass no longer makes the string longer.
  let l;
  do {
    l = s.length;
    s = s.replace(langr, "$1#$2|");
  } while (s.length > l);

  return s;
};
```
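The output format described above is easy to take apart again. A small sketch, assuming only that format (each token preceded by ‘#’, a single ‘|’ cursor, no other ‘#’ or ‘|’); `splitLexed` and `significantTokens` are hypothetical helpers, not part of the listing.

```javascript
// Hypothetical helpers, not part of the lexer listing. They assume only the
// output format described in the text: every token is preceded by '#', a
// single '|' marks where lexing stopped, and no other '#' or '|' appears.
function splitLexed(lexed) {
  const cursor = lexed.indexOf("|");
  // Before the cursor: "#tok1#tok2...". After it: text that was not lexed.
  const head = cursor < 0 ? lexed : lexed.slice(0, cursor);
  const rest = cursor < 0 ? "" : lexed.slice(cursor + 1);
  // split() yields an empty string before the first '#'; drop it.
  const tokens = head.split("#").slice(1);
  return { tokens, rest };
}

// Keep only tokens a parser would care about: drop whitespace and comments.
function significantTokens(tokens) {
  return tokens.filter((t) => !/^\s+$/.test(t) && !/^\/[*/]/.test(t));
}
```

For instance, `splitLexed("#a# |@ b")` gives the tokens `["a", " "]` and the unlexed remainder `"@ b"`. The helpers leave HASH and PIPE alone, since they cannot tell a renamed ‘#’ from an identifier that happens to be spelled HASH.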

I think, perhaps counterintuitively, that intelligibility comes at the cost of legibility: an intriguing trade space.

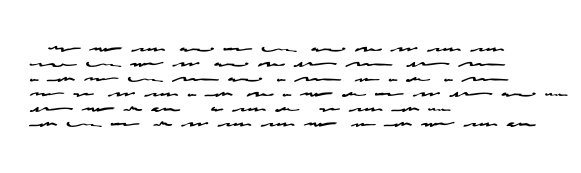

Looking for illegible instead? Try the free MumbleGrumble font, a sort of nihilistic Reenie Beanie.
